Spindox has developed a predictive model of data center electricity consumption. Artificial intelligence can contribute to the efficiency of ICT, with the ultimate goals of cost savings and environmental sustainability.

Since 2016 DeepMind, Google’s AI company, has been developing models to predict and optimize data center energy consumption. In particular, the algorithms were deployed to reduce the part of the energy bill related to server cooling. DeepMind’s client is Google itself, which manages one of the largest and most energy-intensive computing infrastructures in the world. Google has reported excellent results, with savings of 40%. On the DeepMind blog, Chris Gamble and Jim Gao described the project in a post dated August 17 (Safety-first AI for autonomous data centre cooling and industrial control).

Spindox’s cognitive computing team has worked on a similar problem. In our case, we designed a model that forecasts the annual electricity consumption of the data centers of a major global telecommunications operator, as a function of voice traffic, data traffic and the technical and energy characteristics of each data center, across the different countries where our customer operates.

How we worked: datasets and models

Energy consumption was broken down into three components: core, local data center and global data center. We examined a dataset covering five years of consumption, which includes:

- Annual data traffic [PB]

- Annual voice traffic in millions of minutes [Mmin]

- Annual PUE (power usage effectiveness): an energy efficiency index, discussed later in the article

- Annual consumption for the core component [kWh]

- Annual consumption for the local data center [kWh]

- Annual consumption for the global data center [kWh]

Since not all countries report consumption for all three components, we split the original dataset into three datasets, one for each target variable (CoreTotal, LocalDataCenter, GlobalDataCenter), and designed a separate model for each.
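The split can be sketched in a few lines of Python. This is a minimal illustration, not Spindox's code: only the three target names come from the article, while the record layout, the feature names (`data_traffic_pb`, `voice_traffic_mmin`, `pue`) and the sample values are hypothetical. Missing consumption is assumed to be stored as `None`.

```python
from __future__ import annotations

# Target variables named in the article: one dataset and one model per target.
TARGETS = ("CoreTotal", "LocalDataCenter", "GlobalDataCenter")
# Hypothetical field names for the explanatory variables (not from the article).
FEATURES = ("data_traffic_pb", "voice_traffic_mmin", "pue")


def split_by_target(rows: list[dict]) -> dict[str, list[dict]]:
    """Return one dataset per target, keeping only the rows where that target is reported.

    A country with no GlobalDataCenter value (None) drops out of that dataset
    but still contributes to the datasets where it does have values.
    """
    datasets: dict[str, list[dict]] = {}
    for target in TARGETS:
        datasets[target] = [
            {"country": r["country"], "year": r["year"],
             **{f: r[f] for f in FEATURES}, target: r[target]}
            for r in rows
            if r.get(target) is not None
        ]
    return datasets


# Made-up records: country "B" reports no global data center consumption.
rows = [
    {"country": "A", "year": 2018, "data_traffic_pb": 120.0, "voice_traffic_mmin": 900.0,
     "pue": 1.8, "CoreTotal": 5.0e7, "LocalDataCenter": 2.0e7, "GlobalDataCenter": 1.0e7},
    {"country": "B", "year": 2018, "data_traffic_pb": 40.0, "voice_traffic_mmin": 300.0,
     "pue": 2.1, "CoreTotal": 1.5e7, "LocalDataCenter": 6.0e6, "GlobalDataCenter": None},
]

datasets = split_by_target(rows)
print({target: len(ds) for target, ds in datasets.items()})
# {'CoreTotal': 2, 'LocalDataCenter': 2, 'GlobalDataCenter': 1}
```

Each target dataset keeps only its own target column, so the three models can be trained independently on the countries that actually report that component.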

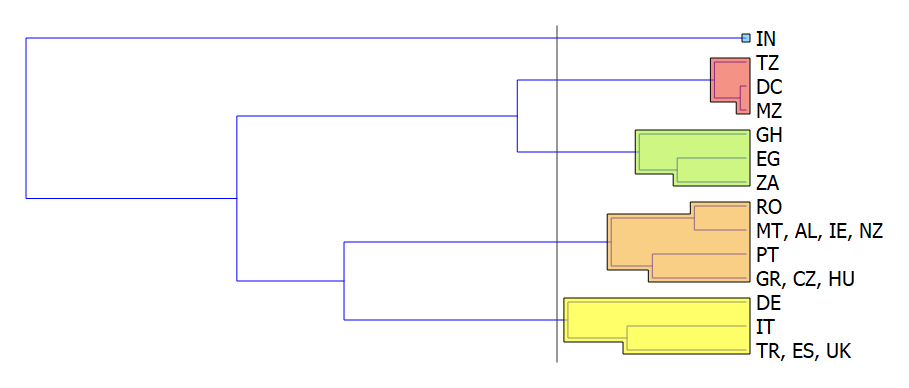

Plotting the CoreTotal variable against voice and data traffic shows that no clear relationship between the variables emerges from the raw data (Fig. 1, left). We therefore grouped the countries with a clustering algorithm applied to their traffic data and PUE for 2014-2018, and aggregated the data by cluster (Fig. 2). This reduces the variance of the dataset and makes the relationships between the variables stand out more clearly (Fig. 1, right). Finally, an Elastic-Net regression model was fitted on the aggregated data of each of the five clusters identified.
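The cluster-then-regress pipeline can be sketched in plain Python. This is a hedged illustration under several assumptions, not the model Spindox actually used. The article does not name the clustering algorithm, so k-means stands in for it here. Each country is profiled by its average traffic and PUE, cluster data are aggregated by summing per year, and the Elastic-Net is fitted by coordinate descent on the objective given in the docstring. Function names, hyperparameters (`alpha`, `l1_ratio`) and the sample data are hypothetical, and the demo uses two clusters instead of five because it has only four countries.

```python
import random
from statistics import mean, pstdev


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's k-means: returns one cluster label per point."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest center by squared Euclidean distance.
        labels = [
            min(range(k), key=lambda c: sum((a - b) ** 2 for a, b in zip(pt, centers[c])))
            for pt in points
        ]
        # Update step: move each center to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [pt for pt, lab in zip(points, labels) if lab == c]
            new_centers.append([mean(col) for col in zip(*members)] if members else centers[c])
        if new_centers == centers:
            break
        centers = new_centers
    return labels


def soft_threshold(z, gamma):
    """Proximal operator of the L1 penalty."""
    return max(abs(z) - gamma, 0.0) * (1.0 if z >= 0 else -1.0)


def elastic_net(X, y, alpha=0.1, l1_ratio=0.5, iters=500):
    """Coordinate descent for the Elastic-Net objective
    1/(2n)*||y - b - Xw||^2 + alpha*l1_ratio*||w||_1 + 0.5*alpha*(1 - l1_ratio)*||w||^2.
    Returns (weights, intercept)."""
    n, p = len(X), len(X[0])
    x_mean = [mean(col) for col in zip(*X)]
    y_mean = mean(y)
    # Centering X and y lets the unpenalized intercept be recovered at the end.
    Xc = [[v - m for v, m in zip(row, x_mean)] for row in X]
    yc = [v - y_mean for v in y]
    w = [0.0] * p
    for _ in range(iters):
        for j in range(p):
            # Partial residual: prediction error ignoring feature j.
            r = [yc[i] - sum(Xc[i][q] * w[q] for q in range(p) if q != j) for i in range(n)]
            rho = sum(Xc[i][j] * r[i] for i in range(n)) / n
            z = sum(Xc[i][j] ** 2 for i in range(n)) / n
            w[j] = soft_threshold(rho, alpha * l1_ratio) / (z + alpha * (1 - l1_ratio))
    intercept = y_mean - sum(wj * mj for wj, mj in zip(w, x_mean))
    return w, intercept


def fit_per_cluster(rows, k, target="CoreTotal", alpha=0.1, l1_ratio=0.5):
    """Cluster countries, aggregate each cluster by year, fit one Elastic-Net per cluster."""
    feats = ("data_traffic_pb", "voice_traffic_mmin", "pue")  # hypothetical field names
    countries = sorted({r["country"] for r in rows})
    # Country profile: average traffic and PUE over the observed years.
    profiles = [[mean(r[f] for r in rows if r["country"] == c) for f in feats] for c in countries]
    # z-score each feature so PB, Mmin and PUE weigh comparably in the distance.
    stats = [(mean(col), pstdev(col) or 1.0) for col in zip(*profiles)]
    scaled = [[(v - m) / s for v, (m, s) in zip(prof, stats)] for prof in profiles]
    cluster_of = dict(zip(countries, kmeans(scaled, k)))
    # Sum traffic and consumption over the countries of each cluster, year by year.
    agg = {}
    for r in rows:
        acc = agg.setdefault((cluster_of[r["country"]], r["year"]), [0.0, 0.0, 0.0])
        acc[0] += r["data_traffic_pb"]
        acc[1] += r["voice_traffic_mmin"]
        acc[2] += r[target]
    models = {}
    for c in sorted(set(cluster_of.values())):
        points = [v for (cl, _year), v in sorted(agg.items()) if cl == c]
        X = [[data, voice] for data, voice, _ in points]
        y = [consumption for _, _, consumption in points]
        models[c] = elastic_net(X, y, alpha, l1_ratio)
    return cluster_of, models


# Made-up example: two small countries (A, B) and two large ones (C, D), 2014-2018.
rows = []
for country, scale, pue in [("A", 1.0, 1.9), ("B", 1.1, 2.0), ("C", 10.0, 1.3), ("D", 9.5, 1.2)]:
    for i, year in enumerate(range(2014, 2019)):
        data, voice = scale * (10 + 3 * i), scale * (50 + i)
        rows.append({"country": country, "year": year, "data_traffic_pb": data,
                     "voice_traffic_mmin": voice, "pue": pue,
                     "CoreTotal": pue * (2e5 * data + 1e4 * voice)})

clusters, models = fit_per_cluster(rows, k=2)
print(clusters)
for c, (w, b) in models.items():
    print(c, [round(x, 1) for x in w], round(b, 1))
```

The objective in the docstring has the same form as the one documented for scikit-learn's `ElasticNet`. In practice, library implementations such as scikit-learn's `KMeans` and `ElasticNet` would be the natural choice; the standard-library version only makes each step explicit.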

Plan to save. And then?

What prospects does the forecasting model created by Spindox open up? A data center is obviously a very complex environment, because of the number of variables involved and the many constraints in play. We are only at the beginning of a line of thinking that puts artificial intelligence at the service of energy saving and sustainability. Improving the efficiency of a data center should not be seen from a purely economic perspective. Sustainability can become an engine of future development and guide our choices. For those who, like us, feel they have a digital soul, the topic could not be hotter. We have come to understand that new technologies, while they can make a decisive contribution to solving some problems, are themselves the cause of many others.

It may be surprising that ICT contributes more to global greenhouse gas emissions than air transport. Yet it does. The carbon footprint of information and communication technology amounts to 2% of total CO2-equivalent emissions (Nicola Jones, How to stop data centres from gobbling up the world’s electricity, “Nature”, 13 September 2018). Of course, this figure refers to the IT and telecommunications sector in its broadest sense. That 2% therefore covers not only software, but also personal and household electronic devices, such as smartphones and televisions, as well as the infrastructure for telephony and for radio and television broadcasting.

200 terawatt hours per year

We know that digital does not mean immaterial. Behind the Net, sometimes imagined as something abstract and without substance, there is a very concrete set of artifacts: cables, transmitters, antennas, servers, terminals, routers and switches. In this respect, there is another, even more significant figure, concerning the energy impact of data centers alone. In 2018, data centers are estimated to have consumed 200 terawatt hours (TWh), accounting for 0.3% of total CO2-equivalent emissions (see again the article by Nicola Jones cited above).

It could be argued that 2% is small. However, two facts should not be forgotten. First, that 2% of emissions comes from an enormous amount of electricity consumption. Second, this consumption is expected to grow considerably in the coming years.

10% of consumption from ICT, but tomorrow it will be worse

Global electricity demand in 2018 was 23 thousand TWh, up 4% from 2017 (source: IEA, Global Energy & CO2 Status Report). Recall that one TWh equals one billion kilowatt hours (kWh) and that an Italian family of four uses an average of 3,300 kWh per year. The ICT sector absorbs about 10% of this huge amount of energy, roughly 2,300 TWh. Put another way, the electricity consumed by ICT in one year could run 1,500 billion cycles of a modern dishwasher, or keep over 87 billion 3-watt bulbs lit for the entire year.
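The comparisons in this paragraph are easy to check. The short Python sketch below uses only the numbers quoted above; the per-cycle dishwasher consumption and the equivalent number of families are derived here for illustration and are not stated in the sources.

```python
# Figures quoted in the text: 2018 global demand and ICT's ~10% share.
GLOBAL_DEMAND_TWH = 23_000
ICT_SHARE = 0.10
KWH_PER_TWH = 1e9          # 1 TWh = one billion kWh
HOURS_PER_YEAR = 24 * 365  # 8,760 h (non-leap year)

ict_kwh = GLOBAL_DEMAND_TWH * ICT_SHARE * KWH_PER_TWH  # about 2.3e12 kWh

# Energy per dishwasher cycle implied by "1,500 billion cycles" (derived, not stated).
kwh_per_cycle = ict_kwh / 1.5e12

# A 3 W bulb burning all year uses 3 * 8,760 / 1,000 = 26.28 kWh.
kwh_per_bulb = 3 * HOURS_PER_YEAR / 1000
bulbs = ict_kwh / kwh_per_bulb

# Equivalent number of Italian families of four at 3,300 kWh per year.
families = ict_kwh / 3_300

print(f"ICT consumption: {ict_kwh / KWH_PER_TWH:,.0f} TWh")
print(f"Implied energy per dishwasher cycle: {kwh_per_cycle:.2f} kWh")
print(f"3 W bulbs lit all year: {bulbs / 1e9:.1f} billion")
print(f"Families of four: {families / 1e6:,.0f} million")
```

The result, about 1.5 kWh per dishwasher cycle and roughly 87.5 billion bulbs, is consistent with the figures in the text.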

What is worrying is the trend. In a pessimistic but plausible scenario, ICT would account for more than 20% of world electricity use within the next decade, with data centers responsible for one third of this consumption (Jens Malmodin, Dag Lundén, The Energy and Carbon Footprint of the Global ICT and E&M Sectors 2010–2015, “Sustainability”, 10, 9, 2018, 3027). Then imagine the impact of further growth of Bitcoin, whose proof-of-work mining requires an enormous amount of energy.

Not everyone shares such a dramatic view. The growth in demand for data is not matched by an equally steep growth in demand for electricity, a sign that the increase in Internet traffic and data load is being offset by greater infrastructure efficiency. All observers, however, recommend vigilance and a determined commitment to energy saving.

The era of green data centers

Hyperscale data centers are much discussed today. These are huge infrastructures that can hold up to 250 thousand servers. The largest hyperscale data center is owned by Microsoft and is located in Chicago, United States. It has an area of 65 thousand square meters, contains over 38 thousand kilometers of cables and cost 500 million dollars. Microsoft operates three other hyperscale data centers, while Google has as many as 12. As for Facebook, in addition to its four hyperscale data centers, the Menlo Park company is planning a mega-hyperscale data center in Singapore: an 11-storey structure with a floor area of over 167 thousand square meters.

Source: Jason Waxman, The Future of Hyperscale Computing: Technologies That Will Disrupt the Data Center

Infrastructures of this type achieve much higher levels of energy efficiency than traditional data centers. The savings are measured by power usage effectiveness (PUE), defined as the ratio between the total energy needed to run the entire facility, including lighting and cooling, and the energy used by the computing equipment alone. A PUE of 1.0 would therefore mean that all the energy goes to computing. Nicola Jones writes: “Conventional data centers have a PUE of about 2.0; in hyperscale data centers this value has been reduced to around 1.2. Google, for example, boasts a PUE of 1.12 on average for all its centers”.
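The PUE definition translates directly into code. The minimal Python sketch below uses hypothetical function names and a made-up example facility. `overhead_share` is simply an algebraic consequence of the definition: the fraction of total energy that does not reach the IT equipment.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total energy spent on cooling, lighting and other overhead."""
    return 1 - 1 / pue_value


# Hypothetical facility: 1,000 MWh for IT plus 1,000 MWh of overhead.
print(pue(2_000_000, 1_000_000))  # 2.0

# PUE values quoted by Nicola Jones: conventional, hyperscale, Google average.
for value in (2.0, 1.2, 1.12):
    print(value, f"{overhead_share(value):.0%}")
```

At a PUE of 2.0, half of the energy goes to overhead. At 1.2, the overhead drops to about one sixth.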

The problem of cooling and water

Is this the only path to green computing? It is, understandably, a path that only large cloud infrastructure providers can follow, and one that gives them a further competitive advantage thanks to their formidable investment capacity. There are certainly other areas that will require a great deal of work, starting with cooling. Today, in a traditional data center, air conditioning accounts for up to 40% of the energy bill. Then there is water consumption: water is needed to operate the cooling systems and causes further environmental problems. A study published a few years ago estimated that in 2014 the water consumption of data centers in the United States had already reached 100 billion liters (Bora Ristic, Kaveh Madani, Zen Makuch, The Water Footprint of Data Centers, “Sustainability”, 7, 8, 2015, 11260-11284).